Parquet Files as a Datastore

Introduction

In this blog we will see the usage of parquet file as a datasource using Spark framework to understand what makes it an attractive solution as a file based datasource when compared to other file storage types.

Apache Parquet

Apache Parquet is an open source, column-oriented data file format designed for efficient data storage and retrieval. It provides competent data compression and encoding schemes with enhanced performance to handle complex data in bulk. Apache Parquet is designed to be a common interchange format for both batch and interactive workloads.

- Fast queries that can fetch specific column values without reading full row data.

- Highly efficient column-wise compression

- High compatibility with OLAP

Parquet was built with complex nested data structures in mind and uses the record shredding and assembly algorithm. Compression schemes need to be specified on a per-column level and is future-proofed to allow adding more encodings as they are invented and implemented.

Characteristics

It is a free and open-source file format, under the Apache license group. It is a language agnostic which helps in using this format in cross platform and cross language scenarios.

Unlike other file storage formats which store in rows, parquet data is organized by columns. It can be used effectively in OLAP use cases, where huge data should be searched through.

Efficient data compression and decompression techniques are followed which occupies less space when compared to other file formats to store same amount of data. It can also be used to store data with complex data structure.

Benefits

- Ideal for storing diverse bigdata like structured data tables, images, videos, and documents.

- Savings on storage space as it uses efficient column-wise compression techniques with flexible encoding schemes.

- Improved data throughput and performance whereby queries that fetch specific column values without reading entire row.

Implementation

We use a simple ‘Java – Maven’ project with jdk 17 and Spark (3.3.2). The program is segregated into 2 functions –

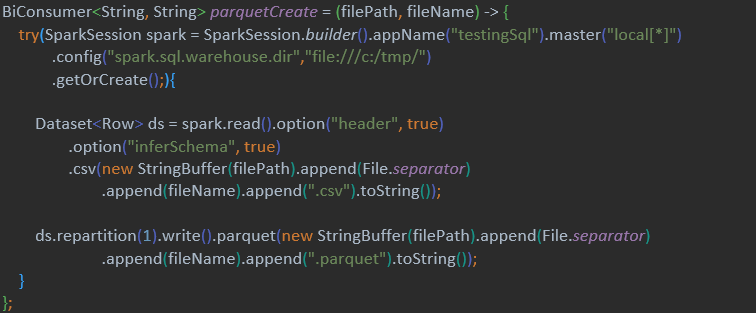

- Generate parquet – Takes source directory and filename as an input. The program will use the source from this file to generate a parquet file with the data.

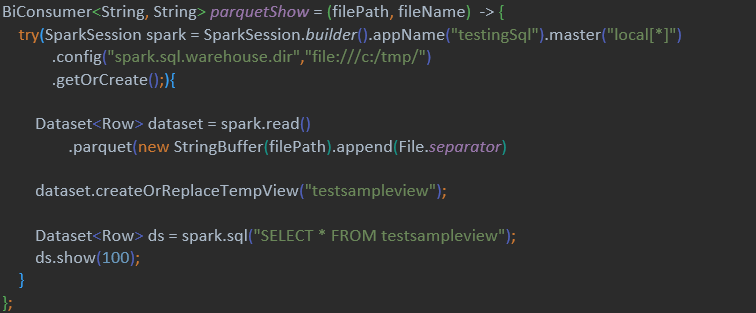

- Query parquet – Takes source directory and filename as an input. The program will use the provided details to query the parquet files present in the directory. It utilizes Spark SQL to perform the search operation.

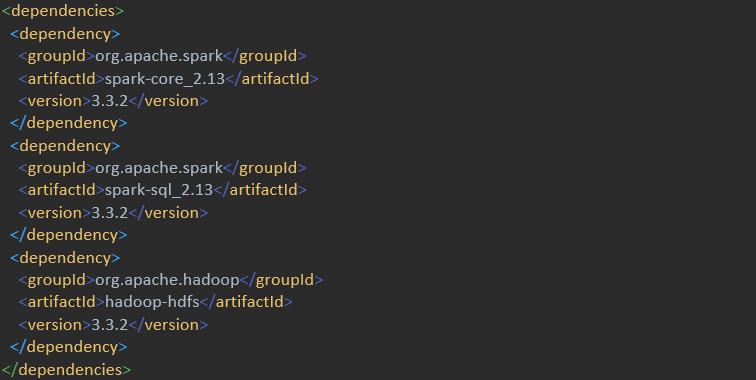

Pom Dependencies:

Generate Parquet:

Query Parquet:

To avoid any issues while running spark 3.3 code with jdk 17, jvm entries to allow access to jdk internal should be provided. Please find below jvm entries:

–add-opens=java.base/java.lang=ALL-UNNAMED

–add-opens=java.base/java.lang.invoke=ALL-UNNAMED

–add-opens=java.base/java.lang.reflect=ALL-UNNAMED

–add-opens=java.base/java.io=ALL-UNNAMED

–add-opens=java.base/java.net=ALL-UNNAMED

–add-opens=java.base/java.nio=ALL-UNNAMED

–add-opens=java.base/java.util=ALL-UNNAMED

–add-opens=java.base/java.util.concurrent=ALL-UNNAMED

–add-opens=java.base/java.util.concurrent.atomic=ALL-UNNAMED

–add-opens=java.base/sun.nio.ch=ALL-UNNAMED

–add-opens=java.base/sun.nio.cs=ALL-UNNAMED

–add-opens=java.base/sun.security.action=ALL-UNNAMED

–add-opens=java.base/sun.util.calendar=ALL-UNNAMED

–add-opens=java.security.jgss/sun.security.krb5=ALL-UNNAMED